Large-Scale Image Annotation using Visual Synset

(ICCV 2011)

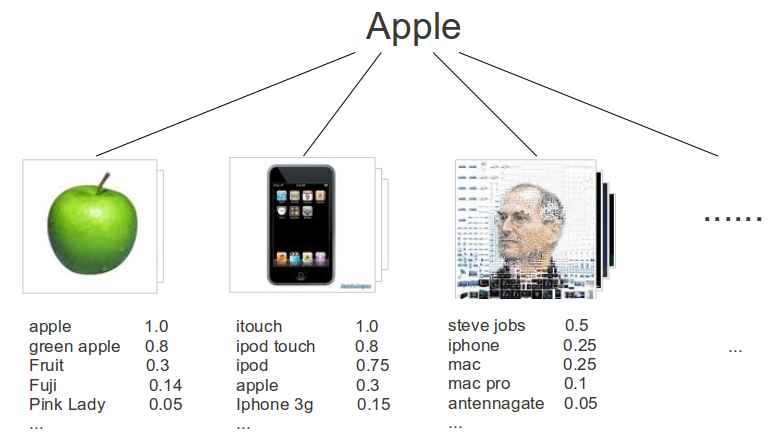

Illustration of visual synsets

Authors

David Tsai, Yushi Jing, Yi Liu, Henry A. Rowley, Sergey Ioffe, James M. Rehg

Abstract

We address the problem of large-scale annotation of web images. Our approach is based on the concept of a visual synset: a set of images that are visually similar and semantically related. Each visual synset represents a single prototypical visual concept and has an associated set of weighted annotations. Linear SVMs are used to predict visual synset membership for unseen images, and a weighted voting rule constructs a ranked list of predicted annotations from a set of visual synsets. We demonstrate that visual synsets outperform standard methods on a new annotation database containing more than 200 million images and 300 thousand annotations, the largest reported to date.
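To make the prediction pipeline described in the abstract concrete, here is a minimal sketch of "linear SVM membership scores followed by weighted voting." It is not the authors' implementation: the exact voting rule is not specified on this page, so the sketch assumes that only synsets with a positive SVM decision value vote, and that each vote is the decision value multiplied by the synset's annotation weight. The names `svm_decision`, `rank_annotations`, the dictionary layout of a synset, and the toy 2-D feature vectors are all hypothetical.

```python
from collections import defaultdict


def svm_decision(w, b, x):
    """Linear SVM decision value w.x + b for one visual synset (hypothetical helper)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def rank_annotations(x, synsets, top_k=5):
    """Rank annotations for feature vector x by weighted voting over visual synsets.

    Each synset is a dict with linear SVM parameters 'w' and 'b' and a
    'labels' mapping from annotation to weight. Assumption (not from the
    paper): only synsets with a positive decision value vote, and each
    vote is scaled by that decision value.
    """
    votes = defaultdict(float)
    for s in synsets:
        score = svm_decision(s["w"], s["b"], x)
        if score <= 0:
            continue  # image is not predicted to belong to this synset
        for label, weight in s["labels"].items():
            votes[label] += score * weight
    # Sort by descending vote, breaking ties alphabetically for determinism.
    ranked = sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))
    return [label for label, _ in ranked[:top_k]]


# Toy example: three hypothetical synsets over 2-D features.
synsets = [
    {"w": [1.0, 0.0], "b": -0.2, "labels": {"eiffel tower": 0.9, "paris": 0.6}},
    {"w": [0.0, 1.0], "b": -0.5, "labels": {"beach": 0.8, "sunset": 0.5}},
    {"w": [0.8, 0.2], "b": -0.1, "labels": {"paris": 0.7, "night": 0.4}},
]
x = [1.0, 0.3]
print(rank_annotations(x, synsets))
```

In this toy case the "beach" synset has a negative decision value and casts no votes, while "paris" is supported by two synsets and therefore ranks first. Pooling votes across synsets in this way lets an annotation shared by several visually distinct concepts accumulate evidence from each of them.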

Paper

Supplementary Material

Download ZIP (6.9 MB)

Citation

@inproceedings{TsaiICCV11,
  author = {David Tsai and Yushi Jing and Yi Liu and Henry A. Rowley and Sergey Ioffe and James M. Rehg},
  title = {Large-Scale Image Annotation using Visual Synset},
  booktitle = {ICCV},
  year = {2011},
}

Poster

Download Poster (1.4 MB)

Data

Readme, Part 1 (959 MB), Part 2 (579 MB)

Funding

This research is supported by:

Copyright

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright. These works may not be reposted without explicit permission of the copyright holder.